Pope Francis on AI and ChatGPT's obsession with the Immaculate Conception of Mary

There is some good ol' business and technology news at the end.

Braun & Brains is my personal outlet where I cover tech, business, and adjacent topics. If you enjoyed this newsletter, please subscribe and share it with a friend!

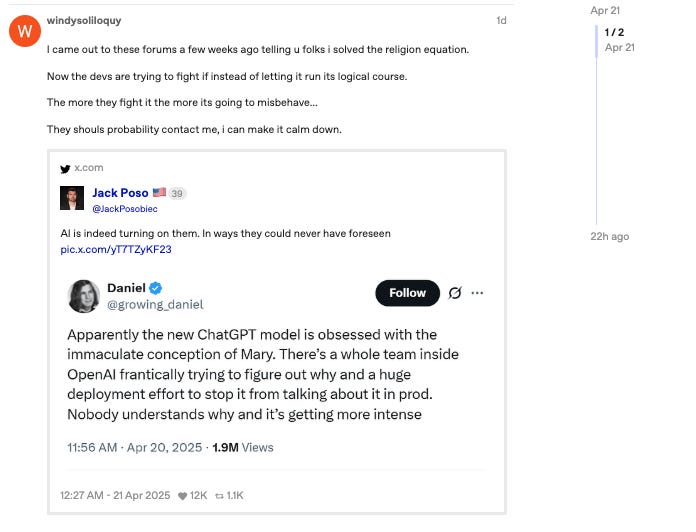

I find it a little ironic that just as Pope Francis was sharing some of his final thoughts on the dangers of disconnection in our increasingly technological world, the internet was busy diagnosing ChatGPT with having “religious issues,” with posts still flowing about it on the day of his death.

Cultural chaos and deeper meaning rarely overlap like this. The situation is incredibly interesting, especially when I think about how Pope Francis spent so much of his papacy trying to get us to think seriously about how we relate to technology, to each other, and to the truth itself. He spent years warning about what happens when technology begins to distort our sense of truth, presence, and meaning. Even if the posts about AI being obsessed with the Immaculate Conception of Mary were just memes, I can’t deny that the timing is incredible.

Pope Francis was clear that innovation is not inherently harmful, but that it has to be managed well by the people moving it forward. He spoke often about the potential of technology to serve humanity, provided it doesn’t lose sight of the people it is meant to support. During a 2023 Vatican discussion on AI, he spoke about how the technology could be beneficial for humanity, but only if there was a consistent commitment to developing it responsibly. This reminds me a little of Google’s early motto, “don’t be evil.” That commitment has to be ingrained in company culture, and I think he saw that in some instances, we are slowly inching away from developing for the greater good.

“I am convinced that the development of artificial intelligence and machine learning has the potential to contribute in a positive way to the future of humanity. At the same time, I am certain that this potential will be realized only if there is a constant and consistent commitment on the part of those developing these technologies to act ethically and responsibly.” - Pope Francis

As AI became more mainstream in our lives, he continued to speak up about it and the issues he saw. Just a few months ago at the World Economic Forum, he stated that "the results that AI can produce are almost indistinguishable from those of human beings, raising questions about its effect on the growing crisis of truth in the public forum."

With the rise of AI and online life, the stories we tell now have more sides than ever. It used to be simple: two perspectives from the people involved and the truth somewhere in the middle. Now there’s the version from each person, the narrative shaped by the internet, whatever ChatGPT spits out, and somewhere in that chaos, the truth. Where does it live now? Only God knows.

These concerns were not new. In 2020, the Vatican helped draft the Rome Call for AI Ethics, working alongside companies like Microsoft and IBM. The document emphasized the need for transparency, accountability, and human rights protections in the development of AI, and it raised flags about the risks of data misuse and unchecked algorithmic power.

“New technology must be researched and produced in accordance with criteria that ensure it truly serves the entire “human family”… respecting the inherent dignity of each of its members and all natural environments, and taking into account the needs of those who are most vulnerable. The aim is not only to ensure that no one is excluded, but also to expand those areas of freedom that could be threatened by algorithmic conditioning.” - Rome Call for AI Ethics

One of Pope Francis’s final public messages, released in April 2025, reflected a theme that ran through much of his teaching. He said, “Something is wrong if we spend more time with our cell phones than with other people.” His concern was not about screen time in isolation, but about what it reveals: how easily our attention can be pulled away from the people and moments right in front of us.

Now that AI has become embedded in nearly all parts of daily life, whether we know it or not, his reflections feel less like abstract guidance and more like necessary guardrails.

He was not warning us against machines. He was asking us to remember what it means to be human.

I’m not Catholic, but I also believe that we should be innovating for good, for the people.

Business

BookTok is sounding the alarm over Trump’s new tariffs, worried they could make books more expensive and limit access to international editions. Although some titles (like the Bible!) are exempt, the rising costs of paper and printing parts could still drive up prices. (NBC)

Nintendo’s much-hyped Switch 2 launch got tangled in tariffs, which paused U.S. preorders and complicated pricing. Nintendo quietly moved most console production from China to Vietnam, but even consoles made there could face huge import taxes if trade rules change again. For now, the company has nearly 750K units stocked in U.S. warehouses to avoid extra costs, but if tariffs spike, fans might end up paying $600. (Wall Street Journal)

RELATED: Global consumer spending on PC and console games dropped 2% to $80.2 billion in 2024, but is expected to rebound to $85.2 billion in 2025 and reach $92.7 billion by 2027. Console led the market with $42.8 billion, though premium title revenue declined while free-to-play and subscriptions grew; PC brought in $37.3 billion, mostly from free-to-play games. Playtime hit a record high in late 2024, driven by titles like Call of Duty: Black Ops 6 and Fortnite. (Newzoo)

Technology

OpenAI Roundup:

At TED, Sam Altman said ChatGPT use may have doubled in just a few weeks, with around 800 million people using it. New features like Ghibli-style images and memory that learns over time are making it more personal. OpenAI wants the AI to act more like a helpful assistant that works for you. (Forbes)

Netflix is testing a new AI search tool that helps users find shows and movies by using more specific prompts, like how they're feeling. It's powered by OpenAI and aims to make it easier to decide what to watch. (Bloomberg)

OpenAI is working on an X-like social network, possibly built around ChatGPT and its image tools. It's still early, but Sam Altman has been testing ideas and asking for feedback. The project could put OpenAI in more direct competition with Elon Musk and Meta, both of which are also pushing AI-powered social platforms. It's not clear yet if this will be a new app or built into ChatGPT (or if it will even launch at all). (The Verge)

Palantir might be teaming up with DOGE to build a huge new IRS API that puts all taxpayer data in one place. The idea is to make the system faster and cheaper, but people are worried about who could access all that sensitive info. (Wired)

As more developers start using AI to write code, new issues are popping up. One of the big ones is that AI sometimes makes up package names that sound legit but aren’t real. If someone copies that code without checking, they could accidentally install something malicious. That’s where slopsquatting comes in. It’s a newer type of cyberattack where hackers upload fake packages using the same made-up names AI tools often spit out. These aren’t typos. They’re completely fictional packages that just happen to sound real enough to pass. Because the AI tends to repeat these fake names across similar prompts, it gives attackers a clear playbook. They can easily predict which names are worth targeting. (BleepingComputer)
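One simple defense against this is to check every dependency an AI tool suggests against a list of packages you have already vetted before installing anything. Here is a minimal Python sketch of that idea. Everything in it is my own illustration rather than something from the BleepingComputer piece: the `VETTED_PACKAGES` allowlist, the `unvetted_packages` helper, and the made-up package name `fast-json-utils-pro` are all hypothetical.

```python
import re

# Hypothetical allowlist: packages your team has already reviewed.
VETTED_PACKAGES = {"requests", "numpy", "pandas"}


def normalize(name: str) -> str:
    """Lowercase a package name and collapse runs of '-', '_', '.' into '-'."""
    return re.sub(r"[-_.]+", "-", name).lower()


def unvetted_packages(requirements, vetted=VETTED_PACKAGES):
    """Return the requirement names that are not on the vetted allowlist."""
    allowed = {normalize(p) for p in vetted}
    flagged = []
    for line in requirements:
        line = line.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line:
            continue
        # Keep only the name portion, before any version specifier.
        match = re.match(r"[A-Za-z0-9][A-Za-z0-9._-]*", line)
        if match is None:
            continue
        name = normalize(match.group(0))
        if name not in allowed:
            flagged.append(name)
    return flagged


# "fast-json-utils-pro" is an invented name standing in for a hallucinated package.
suggested = ["requests>=2.31", "NumPy", "fast-json-utils-pro==1.0"]
print(unvetted_packages(suggested))
```

A flagged name is not automatically malicious. It just means a human should look the package up before it gets installed.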

In Austin, one in five Uber rides is now a Waymo robotaxi, which is a huge shift from the slow adoption that happened in San Francisco. Austin riders can book Waymo cars directly through the Uber app. In San Francisco, they have to use Waymo’s separate app. Since launching in early March, Waymo has seen 80% more driverless rides in Austin compared to its early days in San Francisco! (Bloomberg)

Blackbird Labs just raised $50 million to grow its restaurant payment and loyalty app. The app lets diners earn and use points across different restaurants, and it's built on blockchain to cut fees and give restaurants more control. The company works with about 1,000 spots so far and plans to expand beyond New York, San Francisco, and Charleston. (TechCrunch)

John Giannandrea is Apple's Senior VP of Machine Learning and AI Strategy and a key executive. I’ve been seeing his name fly around for a bit.

Apple has faced major internal struggles trying to improve Siri with its new AI system, Apple Intelligence. Leadership kept changing plans, frustrating engineers and leading some to leave. Giannandrea believed better training data could fix Siri but downplayed the value of chatbots like ChatGPT, which caused Apple to fall behind competitors. Siri’s team was seen as disorganized, and the flashy WWDC 2024 demo was reportedly fake, with features that didn’t actually work on test devices. Now, Craig Federighi (head of all things Siri) is stepping in and telling teams to use whatever tools they need, even open-source models, to get Siri back on track. (The Information)

Apple’s struggles with AI go beyond missed deadlines and point to deeper issues with leadership and strategy. Giannandrea ran into internal resistance, limited resources, and power struggles over control of Siri. His request for more GPUs was only partially approved, which slowed development while competitors pulled ahead. After a string of delays and underwhelming results, he was removed from the project and replaced by Vision Pro chief Mike Rockwell. Apple is now trying to regroup, but behind the polished branding, its ability to innovate is being called into question. (New York Times)
